mechanistic interpretability is mainly about reverse-engineering a trained deep learning (DL) model to see what it relies on to perform the task at hand

GitHub repo: Tickling-Vision-Models

core concepts:

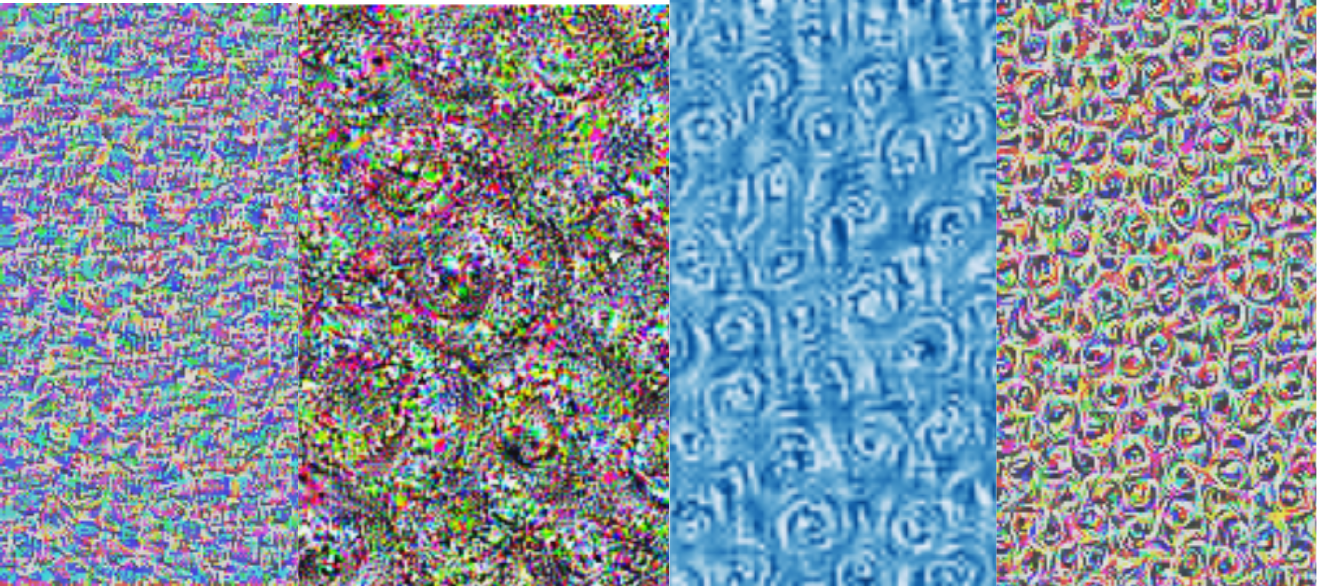

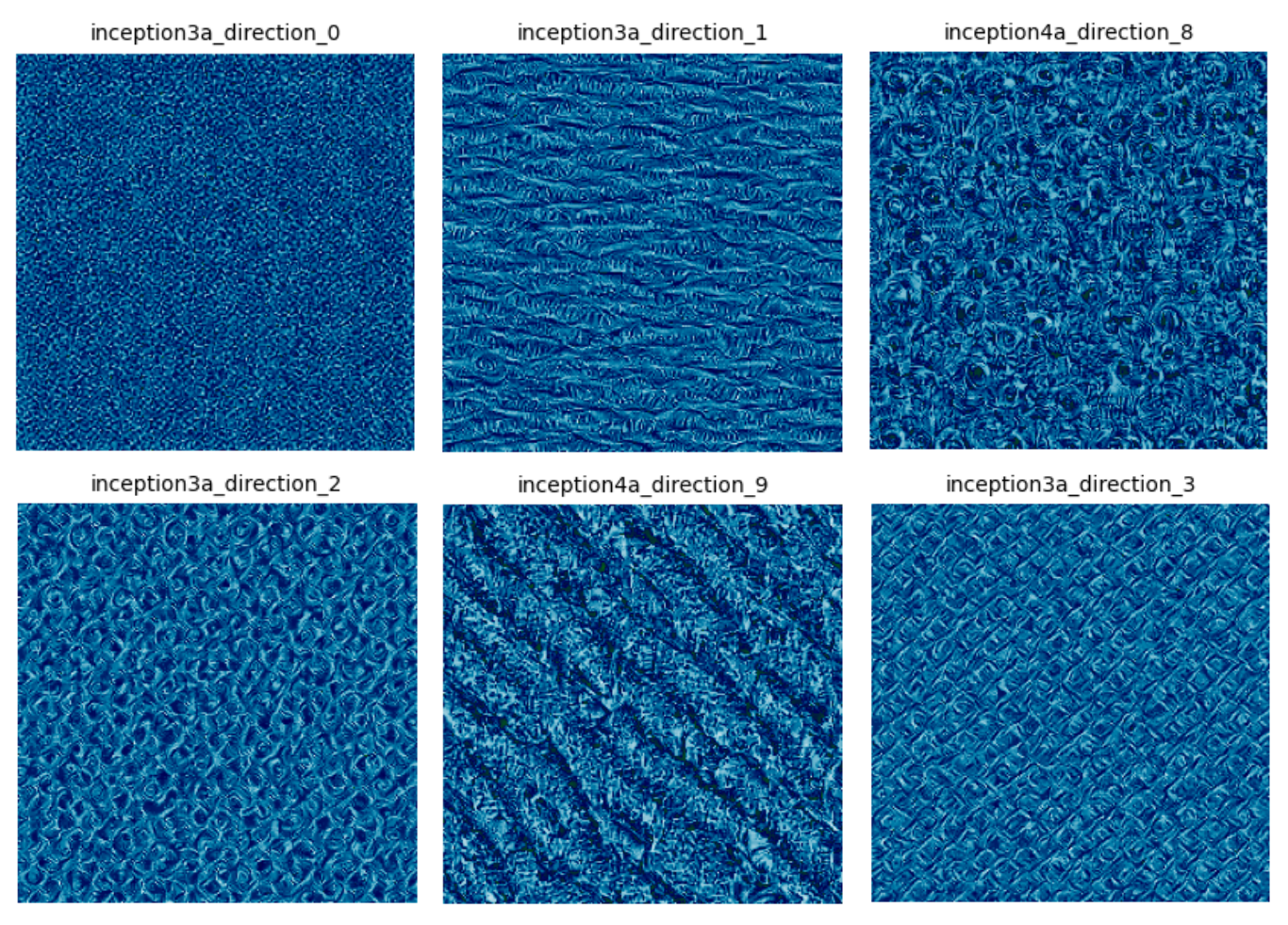

feature visualization (activation maximization)

use gradient ascent to find inputs (images / samples) that maximize the activation of a selected unit (neuron / conv channel / layer / …)
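The objective itself is missing from these notes. Assuming the standard activation-maximization formulation, with $a(x)$ the activation of the selected unit and $\lambda$ a regularization weight (both symbols are notation assumed here), it would read:

$$
x^{*} = \arg\max_{x} \; a(x) - \lambda R(x)
$$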

the objective usually subtracts a regularization term R(x), which could be:

…
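A minimal sketch of the gradient-ascent loop, assuming a toy linear unit a(x) = w · x and an L2 penalty R(x) = ‖x‖² as the regularizer. Both are illustrative choices, not the setup used in the repo, and `maximize_activation`, `lam`, `lr` and `steps` are hypothetical names. For a real vision model the gradient would come from autograd rather than the closed form used here.

```python
def activation(x, w):
    """Toy 'unit': a linear neuron a(x) = w . x (stand-in for a real network unit)."""
    return sum(wi * xi for wi, xi in zip(w, x))


def maximize_activation(w, lam=0.5, lr=0.1, steps=200):
    """Gradient ascent on a(x) - lam * ||x||^2, starting from the zero input."""
    x = [0.0] * len(w)
    for _ in range(steps):
        # d/dx [w . x - lam * ||x||^2] = w - 2 * lam * x
        grad = [wi - 2.0 * lam * xi for wi, xi in zip(w, x)]
        x = [xi + lr * gi for xi, gi in zip(x, grad)]
    return x


w = [1.0, -2.0, 0.5]
x_star = maximize_activation(w)
# Analytically, the regularized optimum is w / (2 * lam); with lam = 0.5 that is w itself.
```

Without the penalty the linear unit has no finite maximizer, which is one reason a regularizer is needed at all.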

Linear Probing

train a linear classifier on frozen activations to check what is linearly decodable from the learned representation, which gives an intuition about the shape of the manifold
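A minimal probe sketch, assuming the activations are already extracted. Here they are replaced by synthetic 2-D Gaussian blobs, and all function names are hypothetical. It trains a logistic-regression probe with plain SGD and compares held-out accuracy against a majority-class baseline.

```python
import math
import random


def make_activations(n, rng):
    """Synthetic stand-in for layer activations: two Gaussian blobs in 2-D."""
    data = []
    for _ in range(n):
        label = rng.randint(0, 1)
        center = 1.0 if label == 1 else -1.0
        data.append(([rng.gauss(center, 0.5), rng.gauss(center, 0.5)], label))
    return data


def logit(w, b, x):
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def train_probe(data, lr=0.1, epochs=30):
    """Logistic-regression probe trained with plain SGD on the log loss."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in data:
            p = 1.0 / (1.0 + math.exp(-logit(w, b, x)))
            w = [wi - lr * (p - y) * xi for wi, xi in zip(w, x)]
            b -= lr * (p - y)
    return w, b


def accuracy(data, w, b):
    hits = sum(1 for x, y in data if (logit(w, b, x) > 0) == (y == 1))
    return hits / len(data)


rng = random.Random(0)
train, held_out = make_activations(200, rng), make_activations(200, rng)
w, b = train_probe(train)
probe_acc = accuracy(held_out, w, b)
# Chance baseline: always predict the majority class of the held-out set.
ones = sum(y for _, y in held_out)
chance = max(ones, len(held_out) - ones) / len(held_out)
```

A probe that clearly beats `chance` suggests the feature is linearly separable at that layer. It does not show that the model actually uses it.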

SAE decomposition

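learn a disentangled latent representation by encouraging sparsity in the latent code (in practice SAEs are usually overcomplete, i.e. wider than the activations they decompose, rather than lower-dimensional)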
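For reference, a common form of the SAE objective (assumed here, since the notes do not state one) combines reconstruction error with an L1 sparsity penalty on the code $z$. The symbols $W_e, b_e, W_d, b_d$ and $\lambda$ are notation introduced for this sketch:

$$
z = \mathrm{ReLU}(W_e x + b_e), \qquad \hat{x} = W_d z + b_d, \qquad \mathcal{L}(x) = \lVert x - \hat{x} \rVert_2^2 + \lambda \lVert z \rVert_1
$$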

Feature Manifold & Geometry

manifold cluster based on a feature F: the subset of points sharing F

local dimension via PCA:
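The formula is missing here. One common way to make the idea concrete (an assumption, not necessarily the definition intended in these notes) is to take the $k$ nearest neighbours of a point $x$, compute the eigenvalues $\sigma_1^2 \ge \dots \ge \sigma_D^2$ of their covariance matrix, and count how many components are needed to reach a variance threshold $\tau$ (for example 0.95):

$$
d(x) = \min \left\{ m \;:\; \frac{\sum_{i=1}^{m} \sigma_i^2}{\sum_{i=1}^{D} \sigma_i^2} \ge \tau \right\}
$$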

curvature: flat manifold → smooth travel on surface

curved manifold → bumpy ride

(a short step might jump to a semantically different region)

components

metric: how to measure lengths & angles on a curved manifold

Geodesic distance: walking on the manifold

Euclidean distance: walking in a straight line (cutting through the ambient space rather than following the manifold)
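To illustrate the two distances, the sketch below samples a semicircle (a hypothetical 1-D manifold in 2-D) and approximates the geodesic as the shortest path on a neighbourhood graph, in the spirit of Isomap. The `radius` threshold and the function names are assumptions.

```python
import heapq
import math


def geodesic(points, src, dst, radius):
    """Approximate geodesic: Dijkstra on a graph linking points closer than `radius`."""
    best = {src: 0.0}
    heap = [(0.0, src)]
    while heap:
        d, i = heapq.heappop(heap)
        if i == dst:
            return d
        if d > best.get(i, math.inf):
            continue  # stale heap entry
        for j, q in enumerate(points):
            step = math.dist(points[i], q)
            if j != i and step <= radius and d + step < best.get(j, math.inf):
                best[j] = d + step
                heapq.heappush(heap, (d + step, j))
    return math.inf  # dst not reachable with this radius


n = 200
points = [(math.cos(math.pi * i / (n - 1)), math.sin(math.pi * i / (n - 1)))
          for i in range(n)]
straight = math.dist(points[0], points[-1])    # cuts across the semicircle
along = geodesic(points, 0, n - 1, radius=0.05)  # follows the arc
```

Here `straight` is the diameter of the circle while `along` approaches half its circumference, so the two notions disagree most for points on opposite sides of a curved region.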

connectedness: all x containing F are reachable from one another through a smooth shift / interpolation

test: interpolation effect on classification / feature identification & activation
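A small sketch of the interpolation test, assuming a hypothetical feature detector `in_ring` that fires inside a ring-shaped region. It counts how often the prediction flips along a straight line between two inputs.

```python
import math


def in_ring(x):
    """Toy 'feature detector': fires when the point lies in the ring 1 <= ||x|| <= 2."""
    return 1.0 <= math.hypot(x[0], x[1]) <= 2.0


def label_changes(predict, a, b, steps=50):
    """Count how often the prediction flips along the straight line from a to b."""
    labels = []
    for k in range(steps + 1):
        t = k / steps
        labels.append(predict([ai + t * (bi - ai) for ai, bi in zip(a, b)]))
    return sum(1 for prev, cur in zip(labels, labels[1:]) if prev != cur)


nearby = label_changes(in_ring, [1.5, 0.0], [1.2, 0.9])   # neighbours on the ring
across = label_changes(in_ring, [1.5, 0.0], [-1.5, 0.0])  # opposite sides of the ring
```

Both endpoints of the `across` pair carry the feature and the ring is connected, yet the straight line leaves it through the hole. A flip along a linear interpolation therefore does not by itself prove the feature region is disconnected; a path that follows the manifold would be the stricter test.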

Adversarial Examples (FGSM & PGD) & mechanistic view

an adversarial perturbation induces a shift in activation space that can be decomposed with an SAE ⇒ the hijacked circuits can be identified from the learned sparse representation
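For reference, a minimal sketch of the two attacks named in the heading, assuming a toy logistic model in place of a vision network so that the input gradient has a closed form. The weights, `eps` and `alpha` are hypothetical values.

```python
import math


def predict_proba(w, b, x):
    """Toy logistic model p(y=1 | x); stands in for a real classifier."""
    return 1.0 / (1.0 + math.exp(-(sum(wi * xi for wi, xi in zip(w, x)) + b)))


def loss_gradient(w, b, x, y):
    """Gradient of the cross-entropy loss w.r.t. the input x: (p - y) * w."""
    p = predict_proba(w, b, x)
    return [(p - y) * wi for wi in w]


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(w, b, x, y, eps):
    """Single step of size eps along the sign of the loss gradient."""
    grad = loss_gradient(w, b, x, y)
    return [xi + eps * sign(gi) for xi, gi in zip(x, grad)]


def pgd(w, b, x, y, eps, alpha, steps):
    """Iterated signed-gradient steps, projected back into the L-inf ball of radius eps."""
    x_adv = list(x)
    for _ in range(steps):
        grad = loss_gradient(w, b, x_adv, y)
        x_adv = [xa + alpha * sign(gi) for xa, gi in zip(x_adv, grad)]
        x_adv = [min(max(xa, xi - eps), xi + eps) for xa, xi in zip(x_adv, x)]
    return x_adv


w, b = [2.0, -1.0], 0.0
x, y = [0.5, 0.2], 1
x_fgsm = fgsm(w, b, x, y, eps=0.3)
x_pgd = pgd(w, b, x, y, eps=0.3, alpha=0.1, steps=5)
```

The activation difference between `x` and its adversarial counterpart is the quantity one would then feed to the SAE.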

Adversarial Path:

An adversarial path is a continuous trajectory through input space (or representation space) that starts at one label and ends at another, while staying imperceptible or minimally different to a human observer.

formal definition:
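The formula is missing from these notes. A possible formalization, reconstructed from the description above (the symbols $f$, $\gamma$, $\epsilon$ and the choice of norm are assumptions): for a classifier $f$ and an input $x_0$ with label $y$, an adversarial path is a map $\gamma$ such that

$$
\gamma : [0, 1] \to \mathcal{X} \ \text{continuous}, \qquad \gamma(0) = x_0, \qquad f(\gamma(0)) = y, \qquad f(\gamma(1)) \neq y, \qquad \lVert \gamma(t) - x_0 \rVert_p \le \epsilon \quad \forall t \in [0, 1]
$$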

clustering Activation atlases:

look for fractures: concepts that split into multiple clusters

Circuit analysis techniques:

ablation:

silence a circuit and measure the downstream effect

patching:

replace a subset of a target image's activations with those from a donor image

feature visualization in learned sparse representation
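Ablation and patching can be sketched on a tiny two-layer network, assuming hand-picked weights and a hypothetical `run` helper that edits hidden activations before the readout. On a real model the same edits are usually made with forward hooks.

```python
def hidden_activations(x, W1):
    """ReLU hidden layer of a toy two-layer network."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in W1]


def run(x, W1, w2, ablate=(), patch=None):
    """Forward pass with optional interventions on the hidden units.

    ablate: indices of hidden units to zero out.
    patch:  {index: value} activations copied in from a donor run.
    """
    h = hidden_activations(x, W1)
    for i in ablate:
        h[i] = 0.0
    for i, value in (patch or {}).items():
        h[i] = value
    return sum(w * hi for w, hi in zip(w2, h))


W1 = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
w2 = [1.0, -1.0, 0.5]
target, donor = [1.0, 2.0], [3.0, 0.0]

clean = run(target, W1, w2)
ablated = run(target, W1, w2, ablate=[1])  # silence hidden unit 1
donor_h = hidden_activations(donor, W1)
patched = run(target, W1, w2, patch={0: donor_h[0]})  # unit 0 taken from the donor

ablation_effect = ablated - clean
patching_effect = patched - clean
```

The two effect sizes are the kind of quantity the "effect size in ablation/patching" metric refers to.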

Metrics:

probe accuracy vs chance baseline

reconstruction loss: MSE on SAE

adversarial success rate

effect size in ablation/patching

clustering purity
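Clustering purity is the metric in this list whose definition is least self-evident. The sketch below assumes the common one: each cluster votes for its majority label, and purity is the fraction of samples covered by those votes. The cluster ids and labels are made-up data.

```python
from collections import Counter


def clustering_purity(cluster_ids, labels):
    """Fraction of samples that belong to the majority label of their cluster."""
    clusters = {}
    for c, y in zip(cluster_ids, labels):
        clusters.setdefault(c, []).append(y)
    majority_total = sum(Counter(ys).most_common(1)[0][1] for ys in clusters.values())
    return majority_total / len(labels)


cluster_ids = [0, 0, 0, 1, 1, 1]
labels = ["cat", "cat", "dog", "dog", "dog", "dog"]
purity = clustering_purity(cluster_ids, labels)
```

Purity rises trivially as the number of clusters grows, so it is best compared across runs with the same cluster count.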

to check

GIG (PFVs, ERFs)

causal tracing (BLIP)

TCAV: Testing with Concept Activation Vectors

draw a linear decision boundary in the latent space of a selected layer, separating samples with feature F from samples without it; the CAV is the normal vector to that boundary

CAV formally:

given $h_{l,i}$: logit for class i as a function of the layer-l activations, c: concept, l: layer

how well the CAV V aligns with this gradient reflects how much the concept contributes to that class
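The formula is missing from these notes. Assuming the standard TCAV formulation, the conceptual sensitivity is the directional derivative of the class logit along the CAV, where $f_l(x)$ denotes the layer-$l$ activations of input $x$, $h_{l,i}$ maps those activations to the logit of class $i$, and $V_c^l$ is the CAV for concept $c$ at layer $l$ (symbol names are assumptions):

$$
S_{c,i,l}(x) = \nabla h_{l,i}\big(f_l(x)\big) \cdot V_c^l
$$

A positive value means that nudging the activations toward the concept raises the class-$i$ logit.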

Integrated Gradients

let $x'$ be a baseline input (mean / zero image)
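Assuming the standard definition, the attribution for input dimension $i$ is $\mathrm{IG}_i(x) = (x_i - x'_i) \int_0^1 \frac{\partial F(x' + \alpha (x - x'))}{\partial x_i} \, d\alpha$, with $x'$ the baseline and $F$ the model output. The sketch below approximates the integral with a midpoint Riemann sum on a hypothetical toy function `model`, using finite differences in place of autograd. The function names and `steps` are illustrative.

```python
def numerical_gradient(f, x, eps=1e-5):
    """Central finite-difference gradient of a scalar function f at x."""
    grad = []
    for i in range(len(x)):
        up, down = list(x), list(x)
        up[i] += eps
        down[i] -= eps
        grad.append((f(up) - f(down)) / (2 * eps))
    return grad


def integrated_gradients(f, x, baseline, steps=50):
    """Midpoint Riemann-sum approximation of IG along the straight path baseline -> x."""
    total = [0.0] * len(x)
    for k in range(steps):
        alpha = (k + 0.5) / steps
        point = [b + alpha * (xi - b) for xi, b in zip(x, baseline)]
        grad = numerical_gradient(f, point)
        total = [t + g for t, g in zip(total, grad)]
    return [(xi - b) * t / steps for xi, b, t in zip(x, baseline, total)]


def model(x):
    """Toy scalar 'model': F(x) = x0^2 + 3 * x1."""
    return x[0] ** 2 + 3.0 * x[1]


x, baseline = [2.0, 1.0], [0.0, 0.0]
attributions = integrated_gradients(model, x, baseline)
```

The attributions should sum to `model(x) - model(baseline)` (the completeness property), which is a handy sanity check on any IG implementation.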

Layer Integrated Gradients

let

Generalized Integrated Gradients

let $\gamma : [0, 1] \to$ input space be a smooth path from the baseline to the input
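The formula is missing here. If "generalized" is taken to mean the path-integrated form of IG (an assumption; the GIG variant listed under "to check" may define it differently), then for a path $\gamma$ with $\gamma(0) = x'$ (baseline) and $\gamma(1) = x$ (input), and model output $F$:

$$
\mathrm{IG}^{\gamma}_i(x) = \int_0^1 \frac{\partial F(\gamma(\alpha))}{\partial \gamma_i(\alpha)} \, \frac{\partial \gamma_i(\alpha)}{\partial \alpha} \, d\alpha
$$

Choosing the straight line $\gamma(\alpha) = x' + \alpha (x - x')$ recovers ordinary Integrated Gradients.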

Discretized Integrated Gradients:

GIG using a discrete path (?): common in NLP

Concept localization in hidden layers

CAV-projected IG score per layer

TCAV: sign consistency across a batch of samples

concepts vs layers heatmap / matrix
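The sign-consistency idea behind the TCAV item above can be sketched as the fraction of samples whose class-logit gradient has a positive component along the CAV. The gradients below are made-up numbers standing in for per-sample gradients taken at one layer, and `tcav_score` is a hypothetical helper.

```python
def tcav_score(gradients, cav):
    """Fraction of samples whose directional derivative along the CAV is positive."""
    positive = sum(
        1 for g in gradients
        if sum(gi * vi for gi, vi in zip(g, cav)) > 0
    )
    return positive / len(gradients)


# Hypothetical per-sample gradients of one class logit w.r.t. a layer's activations.
gradients = [[1.0, 0.0], [0.5, 0.5], [-1.0, 0.2], [0.2, -0.1]]
cav = [1.0, 0.0]
score = tcav_score(gradients, cav)
```

Computing this score for every (concept, layer) pair yields the entries of the concepts vs layers heatmap.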

Concept Flow throughout the network

concept score through layers:

PFV: Pointwise Feature Vector

one CAV per spatial location, without global average pooling (GAP)

Dimensionality reduction / projection of high-dimensional data (extracting intrinsic dimensions):

(to elaborate on/ note)

PCA

ICA

KPCA

t-SNE

UMAP